With all the hype around ChatGPT and LLMs, I decided to write a blog post series on LLMs. The series will focus on fine-tuning LLMs and on how we can leverage them to perform NLP tasks. We will start by discussing the different types of LLMs, then gradually move on to fine-tuning LLMs for specific downstream…

Author: Aritra Sen

NLP: Toxic comment classification with TorchText & Pytorch Lightning

In this blog post we will tackle multilabel (6 target classes) toxic comment classification, an NLP task from a competition previously hosted on Kaggle. Below is the competition overview from the Kaggle page: Discussing things you care about can be difficult. The threat of abuse and harassment online means that many people stop expressing…

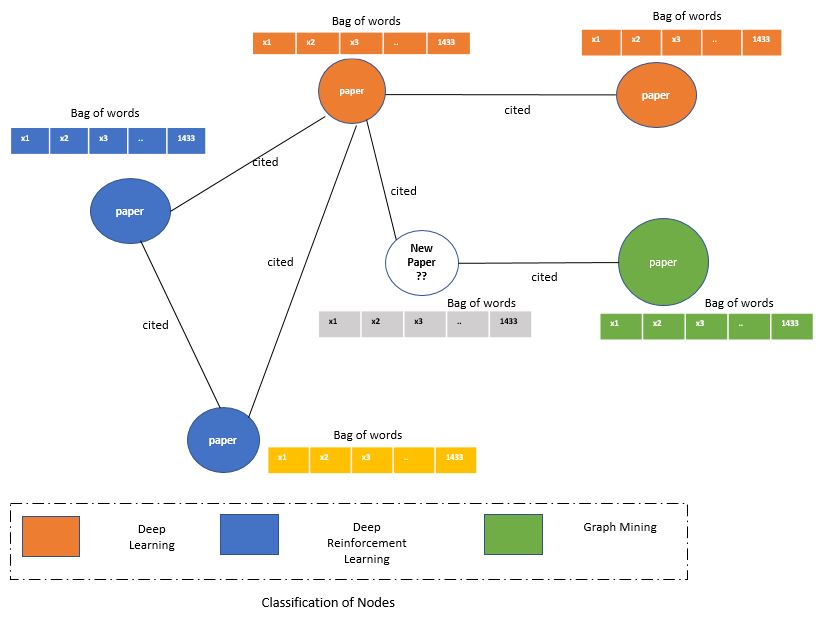

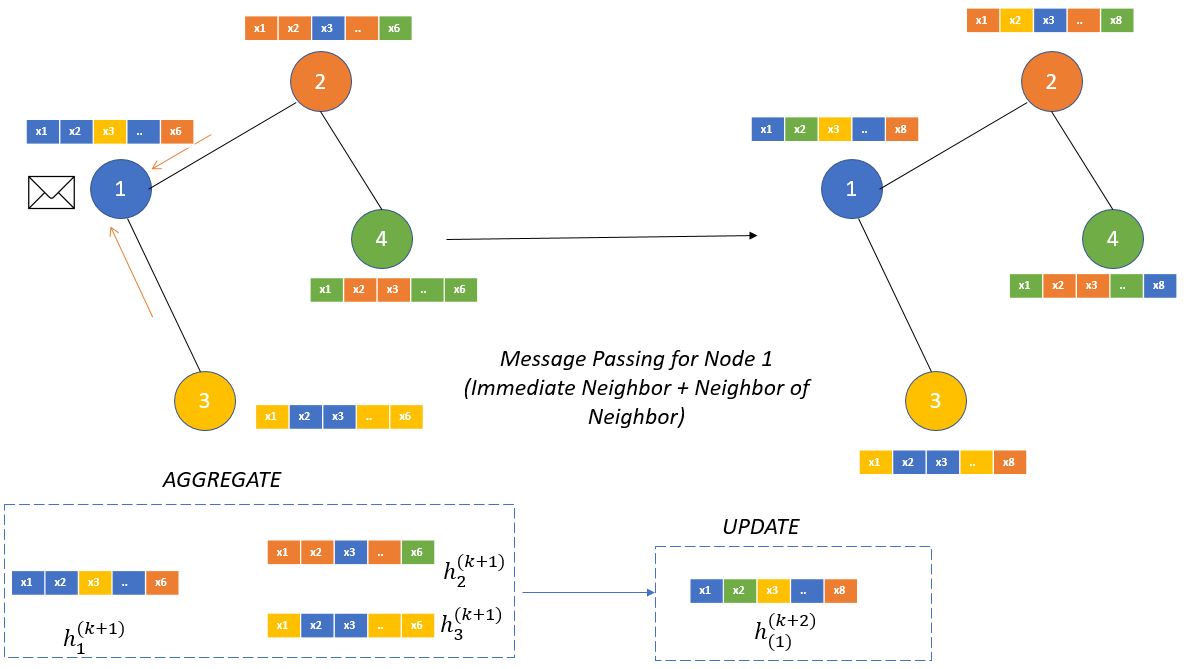

Graph Neural Network – Node Classification with PyG – 2.1

After covering the basics of GNNs and PyG, let’s now implement GNN model training and inference. In this post we will use the Cora citation dataset, where each node is a paper and each edge represents a citation. There are seven types of papers (for example, papers on DL, RL, or graphs) which…

Graph Neural Network – Pytorch Geometric (PyG) Intro – 2.0

The last two posts covered the basics and inner workings of GNNs. Now we will start implementing GNNs with the help of PyTorch Geometric. In case you are not familiar with how PyTorch works, please go through my previous tutorial series, Deep Learning with Pytorch. In this…

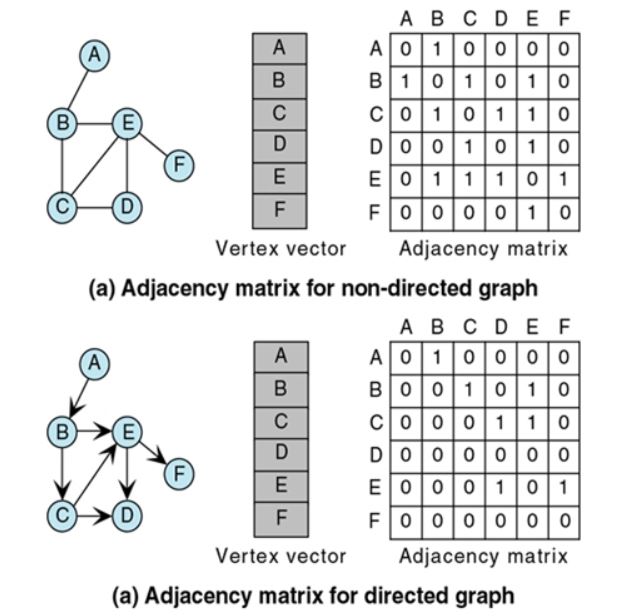

Graph Neural Network – Getting Started – 1.0

In this new blog post series we will talk about graph neural networks, which, according to the ‘State of AI Report 2021’, are one of the hottest fields of AI research. This series will be mostly implementation-oriented; however, the required theory will be covered as much as…

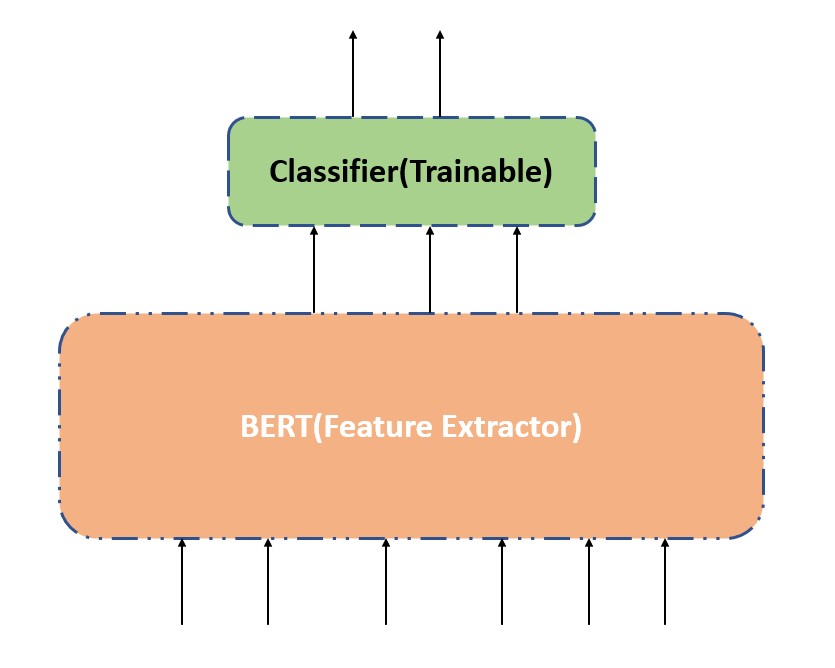

1.2 – Fine Tune a Transformer Model (2/2)

In the last post we discussed that we can fine-tune a BERT model using the following two techniques: (1) update the weights of the pre-trained BERT model along with the classification layer; (2) update only the weights of the classification layer and not the pre-trained BERT model. This process amounts to using the pre-trained BERT model as…

1.1 – Fine Tune a Transformer Model (1/2)

In the last post, we talked about the Transformer pipeline and the inner workings of the all-important tokenizer module, and at the end we made predictions using the existing pre-trained models. During fine-tuning, we can adjust the weights of the model in the following two ways: update the weights of the pre-trained BERT model along…

1.0 – Getting started with Transformers for NLP

In this post we will go through a hands-on implementation with the Hugging Face transformers library for solving a few simple NLP tasks. We will mainly focus on the hands-on part; in case you are interested in learning more about transformers and the attention mechanism, below are a few resources: Getting started with Google Bert, Neural Machine…

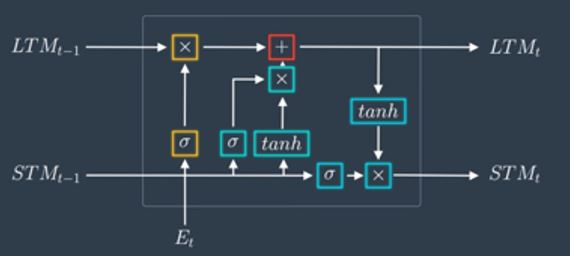

Deep Learning with Pytorch – Text Generation – LSTMs – 3.3

So far in this Deep Learning with Pytorch series, we have seen how to work with tabular data, images, and time series data; in this post we will see how to work with plain text data. Along with generating text with the help of LSTMs, we will also learn two other important…