Transfer learning is the process of applying the knowledge you gained from one task to a new, related task. A simple example is carrying over what you learned while riding a bicycle when you learn to ride a motorbike.

From the CS231n course notes:

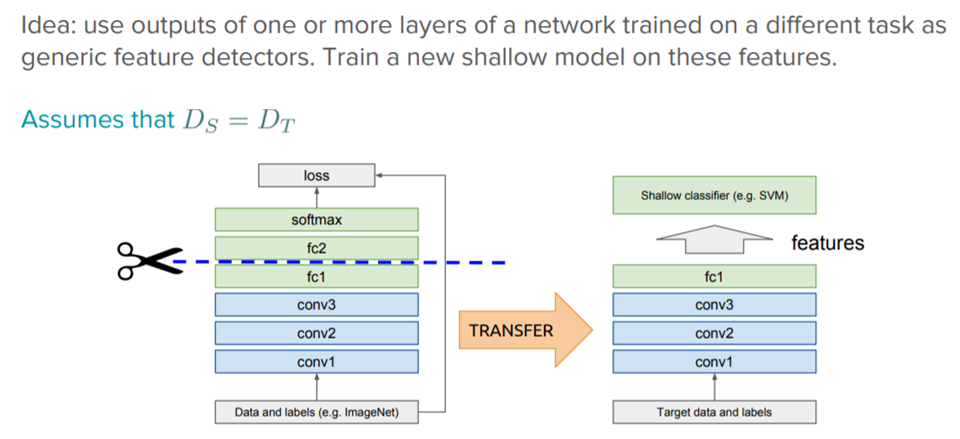

In practice, very few people train an entire Convolutional Network from scratch (with random initialization), because it is relatively rare to have a dataset of sufficient size. Instead, it is common to pretrain a ConvNet on a very large dataset (e.g. ImageNet, which contains 1.2 million images with 1000 categories), and then use the ConvNet either as an initialization or a fixed feature extractor for the task of interest.
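The first option, using the pretrained ConvNet as an initialization (fine-tuning), can be sketched in PyTorch as follows. This is a minimal sketch under stated assumptions: `TinyConvNet` is a hypothetical, randomly initialized stand-in for a real pretrained model (with recent torchvision versions you would typically start from something like `models.resnet18(weights="IMAGENET1K_V1")`, whose final layer is also called `fc`), the 2-class target task and the dummy batch are made up, and the learning rates are only illustrative. Every layer stays trainable, and the pretrained layers get a smaller learning rate than the new head, which is a common choice when fine-tuning.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TinyConvNet(nn.Module):
    """Hypothetical stand-in for a pretrained ConvNet; the head is named `fc` like torchvision's ResNets."""

    def __init__(self, num_classes: int = 1000):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.fc(self.features(x))


# Assume these weights came from ImageNet pretraining (1000 classes).
model = TinyConvNet(num_classes=1000)

# Replace the 1000-way head with one for the new task (2 classes is an assumption).
model.fc = nn.Linear(model.fc.in_features, 2)

# Pretrained weights as an initialization: all layers stay trainable, but the
# pretrained backbone gets a smaller learning rate than the new, random head.
optimizer = torch.optim.SGD(
    [
        {"params": model.features.parameters(), "lr": 1e-4},
        {"params": model.fc.parameters(), "lr": 1e-3},
    ],
    momentum=0.9,
)

backbone_before = model.features[0].weight.detach().clone()

# One training step on a dummy batch of four 32x32 RGB images.
images = torch.randn(4, 3, 32, 32)
labels = torch.tensor([0, 1, 0, 1])
loss = nn.functional.cross_entropy(model(images), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()

# Unlike the fixed-feature-extractor setup, the backbone weights are updated too.
print("backbone updated:", not torch.equal(backbone_before, model.features[0].weight))
```

The per-group learning rates come from passing a list of dicts to the optimizer; the same idea works with any `torch.optim` optimizer.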

For computer vision, some popular pretrained models are listed below, with links to their descriptions:

In the tutorial below, we freeze the weights of the whole network except the final fully connected layer. That last fully connected layer is replaced with a new one with random weights, and only this layer is trained. I have uploaded the dataset to Kaggle for ease of access, and there is also a Kaggle kernel version of this tutorial.
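The freeze-and-replace approach described above can be sketched in PyTorch roughly like this. It is a minimal sketch, not the notebook's exact code: `TinyConvNet` is a hypothetical, randomly initialized stand-in for a pretrained model whose final fully connected layer is named `fc` (as in torchvision's ResNets), and the 2-class task, dummy batch, and learning rate are assumptions. The key steps are setting `requires_grad = False` on every existing parameter, creating a fresh `nn.Linear` (which is trainable by default), and handing only that layer's parameters to the optimizer.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TinyConvNet(nn.Module):
    """Hypothetical stand-in for a pretrained ConvNet with a final layer named `fc`."""

    def __init__(self, num_classes: int = 1000):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.fc(self.features(x))


model = TinyConvNet(num_classes=1000)  # assume pretrained weights are loaded here

# 1. Freeze every existing parameter so the optimizer step cannot change them.
for param in model.parameters():
    param.requires_grad = False

# 2. Replace the final fully connected layer. A new nn.Linear starts with random
#    weights and requires_grad=True by default, so it is the only trainable part.
num_classes = 2  # assumption: a two-class target task
model.fc = nn.Linear(model.fc.in_features, num_classes)

# 3. Give the optimizer only the new layer's parameters.
optimizer = torch.optim.SGD(model.fc.parameters(), lr=1e-3, momentum=0.9)
criterion = nn.CrossEntropyLoss()

frozen_before = model.features[0].weight.clone()
head_before = model.fc.weight.detach().clone()

# One training step on a dummy batch of four 32x32 RGB images.
images = torch.randn(4, 3, 32, 32)
labels = torch.tensor([0, 1, 0, 1])
model.train()
optimizer.zero_grad()
loss = criterion(model(images), labels)
loss.backward()
optimizer.step()

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)  # ['fc.weight', 'fc.bias']
```

Because no frozen parameter requires a gradient, backpropagation stops at the new layer, so each step is much cheaper than fine-tuning the whole network.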


Please like and share, and leave a comment if you have any questions.