In the last post we saw how a GPU can speed up the training of a neural network in PyTorch. Apart from using a GPU, the three techniques below can also speed up training. Let's discuss them briefly before diving into the code:

1. Normalization of input data:

We have already seen how to normalize the input data using torchvision's transforms.Compose (which works for images). If your data is not images, you can also do the normalization using sklearn.
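For non-image data, the normalization with sklearn could look roughly like the sketch below. It assumes `StandardScaler` is the scaler you want (the post does not say which one was used), and the arrays `X_train` and `X_test` are made-up toy data for illustration only.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: two features on very different scales.
X_train = np.array([[1.0, 1000.0],
                    [2.0, 2000.0],
                    [3.0, 3000.0],
                    [4.0, 4000.0]])
X_test = np.array([[2.5, 2500.0],
                   [5.0, 500.0]])

scaler = StandardScaler()

# Learn the per-feature mean and standard deviation from the training data only,
# then scale the training data with them.
X_train_scaled = scaler.fit_transform(X_train)

# Reuse the training statistics on the test data (no refitting).
X_test_scaled = scaler.transform(X_test)

print(X_train_scaled.mean(axis=0))  # per-feature mean of the scaled training data
print(X_train_scaled.std(axis=0))   # per-feature standard deviation
```

After scaling, both features of the training data have roughly zero mean and unit variance, so neither one dominates the shape of the cost function.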

An example from Andrew Ng's deep learning course explains why normalization works: with unnormalized data the contours of the cost function are very elongated, whereas with normalized data the contours are more rounded, so it is much easier to reach the global minimum, which makes training faster.

2. Batch Normalization:

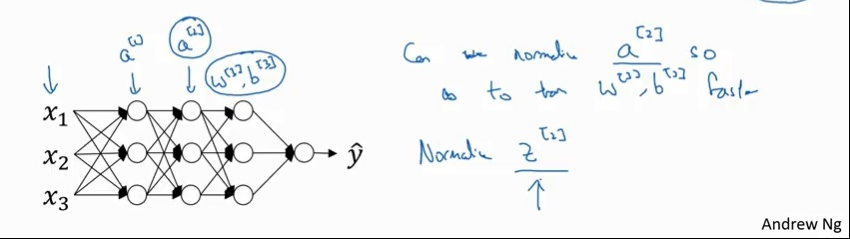

So far we have only normalized the input data. When your network is very deep, you would also want to normalize the inputs to the deeper layers (as shown below, for faster learning of the parameters w3 and b3).

In batch normalization, we generally normalize the output of the linear layer before applying the non-linear activation function, as we will see in the coding section. Apart from speeding up slow training, batch normalization also helps with the covariate shift problem.
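As a minimal sketch of that ordering (linear layer, then batch normalization, then the activation), assuming a small fully connected network built with `nn.Sequential`; the layer sizes and the random input batch are arbitrary choices for illustration, not taken from the post.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical sizes: 20 input features, 64 hidden units, 2 output classes.
model = nn.Sequential(
    nn.Linear(20, 64),    # linear layer
    nn.BatchNorm1d(64),   # normalize the linear output over the batch
    nn.ReLU(),            # non-linear activation applied after normalization
    nn.Linear(64, 2),
)

x = torch.randn(32, 20)   # a made-up batch of 32 samples

model.train()             # training mode: batch statistics are used
logits = model(x)

# Inspect the values that reach the activation: output of Linear + BatchNorm1d.
with torch.no_grad():
    pre_activation = model[1](model[0](x))

print(logits.shape)
print(pre_activation.mean(dim=0)[:3])                  # close to 0 per feature
print(pre_activation.var(dim=0, unbiased=False)[:3])   # close to 1 per feature
```

In training mode each hidden feature entering the ReLU has approximately zero mean and unit variance across the batch, before the layer's learnable scale and shift move away from their initial values.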

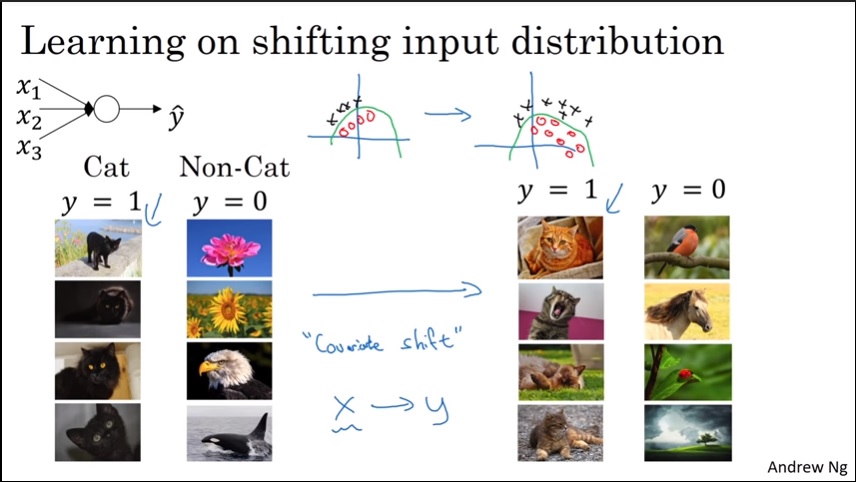

In the above example, say you have trained your cat classifier on black-and-white cat images, and now you need to predict on color images, which have a different distribution from the B/W images (covariate shift). When covariate shift happens, that is, when the data distribution changes, we normally need to retrain the model. Batch normalization helps here by keeping the mean and variance of each layer's inputs stable, which in a way keeps the data distribution seen by the deeper layers the same.

An important point to remember: we calculate the mean and variance from the training dataset and use the same values on the test dataset.
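In PyTorch this behaviour is tied to the module's mode. The sketch below assumes a standalone `nn.BatchNorm1d` layer and made-up data: in `train()` mode the layer accumulates running estimates of the mean and variance, and in `eval()` mode it normalizes with those stored estimates instead of the statistics of the current batch.

```python
import torch
from torch import nn

torch.manual_seed(0)

bn = nn.BatchNorm1d(3)

# Hypothetical "training" batch with mean around 10 and std around 5.
train_batch = torch.randn(256, 3) * 5.0 + 10.0
# Hypothetical "test" batch drawn the same way.
test_batch = torch.randn(8, 3) * 5.0 + 10.0

with torch.no_grad():
    # Training mode: every forward pass updates running_mean / running_var.
    bn.train()
    for _ in range(200):
        bn(train_batch)

    # Evaluation mode: the stored training statistics are reused as-is.
    bn.eval()
    out = bn(test_batch)

    # Manual normalization with the stored statistics (scale=1, shift=0 at init).
    expected = (test_batch - bn.running_mean) / torch.sqrt(bn.running_var + bn.eps)

print(bn.running_mean)               # close to the training batch mean
print(torch.allclose(out, expected, atol=1e-5))
```

This is why calling `model.eval()` before validation or testing matters when a network contains batch normalization layers.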

3. Learning Rate Scheduler:

An improper learning rate can also be a problem: too small a rate makes training slow, while too large a rate can overshoot the global minimum.

A learning rate scheduler helps speed up training. For example, you can use a much higher learning rate while the error is high and gradually decrease it after a certain number of epochs as you approach the global minimum. The PyTorch code implementation will make this clearer.
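One way to get this "start high, decay every few epochs" behaviour is `torch.optim.lr_scheduler.StepLR`. The sketch below is only an illustration: the choice of `StepLR`, the starting rate of 0.1, the step size of 10 epochs, the decay factor of 0.5, and the tiny model with random data are all assumptions, not the settings used in this series.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)

model = nn.Linear(10, 1)                       # hypothetical tiny model
optimizer = optim.SGD(model.parameters(), lr=0.1)

# Halve the learning rate every 10 epochs.
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)

lrs = []
for epoch in range(30):
    # One made-up training step per epoch on random data.
    x = torch.randn(32, 10)
    y = torch.randn(32, 1)

    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(x), y)
    loss.backward()
    optimizer.step()

    # Record the rate used in this epoch, then let the scheduler update it.
    lrs.append(optimizer.param_groups[0]["lr"])
    scheduler.step()

print(lrs[0], lrs[10], lrs[20])
```

Note that `scheduler.step()` is called once per epoch, after `optimizer.step()`. With these settings the rate is 0.1 for epochs 0 to 9, 0.05 for epochs 10 to 19, and 0.025 for epochs 20 to 29.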

Let’s get into the coding part now.

Do like and share, and comment if you have any questions.