In this Deep Learning with Pytorch series , so far we have seen the implementation or how to work with tabular data , images , time series data and in this we will how do work normal text data. Along with generating text with the help of LSTMs we will also learn two other important concepts – gradient clipping and Word Embedding.

Gradient Clipping:

The problem of exploding gradients is more common with recurrent neural networks like LSTMs given the accumulation of gradients unrolled over many input time steps or sequence length. In neural network training , once the forward propagation is done then we calculate the loss by comparing the predicted value with actual after that we update the weights using the derivative of the loss and learning rate. Problem comes when these values of these gradients becomes extremely large or small. The weights can take on the value of an “NaN” or “Inf” in these cases of Vanishing or Exploding gradients and network almost stops learning.

Exploding gradients is also a problem in recurrent neural networks such as the Long Short-Term Memory network given the accumulation of error gradients in the unrolled recurrent structure.

On solution to this problem is Gradient clipping where we can force the gradient values to remain in a specific minimum or maximum value if the gradient exceeded a range. In code example below how we can do this in Pytorch.

Word Embedding:

Whenever we work text , we need to convert these texts into numbers before feeding them in the Neural Network.On of the simplest and easy to understand way is to do one hot encoding of these words and then feed them into the neural network. Below is an example of one hot encoding for the below sentence –

I am going to office.

Here we have 5 unique words so the vocabulary length is 5 and can represented as shown below where each vector is a single word.

1 0 0 0 0 – I

0 1 0 0 0 – am

0 0 1 0 0 – going

0 0 0 1 0 – to

0 0 0 0 1 – office

This works well when we have small no of vocabulary , however this one hot encoding suffers from below mentioned problems –

> Consumes lot of memory to store the words

> No relation or context between the words has been preserved.

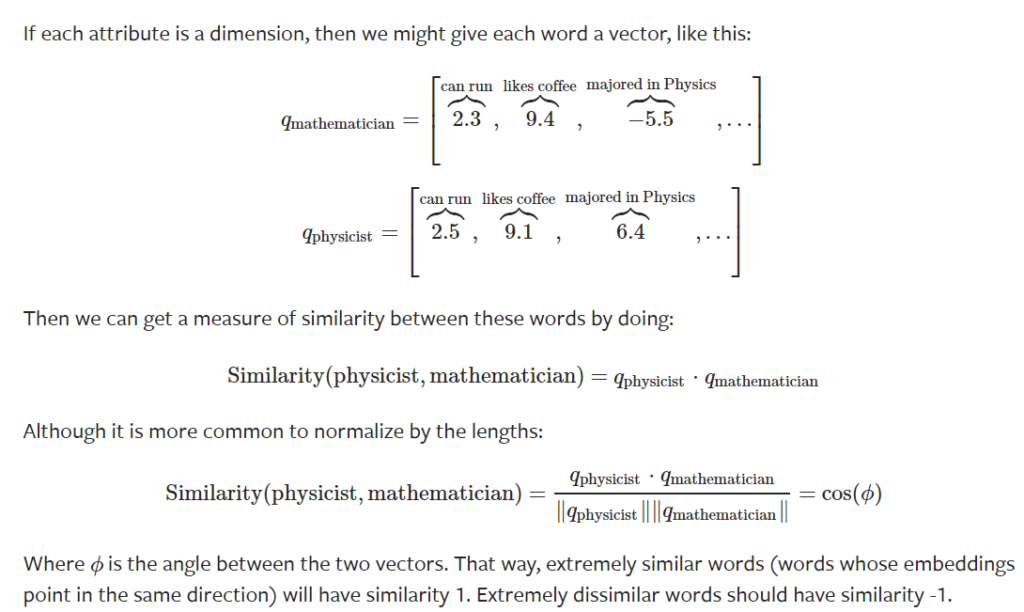

Also in this example each word is independent and no notion of similarity is maintain. We might want to store the numerical values of these words such a way such that semantic similarity is maintained. Taking the below example from Pytorch official tutorial –

Suppose we are building a language model. Suppose we have seen the sentences –

- The mathematician ran to the store.

- The physicist ran to the store.

- The mathematician solved the open problem.

In our training data we might got the below sentence –

The physicist solved the open problem.

Now how can we store this semantic similarity that mathematician and physicist is good at performing similar tasks(or attributes). So if we treat each attributes as dimensions and we can assign (or the neural network learns by training) similar values for mathematician and physicist then in that multidimensional space they would be close to each other as shown below –

Below is a screenshot from the generated text –

Do like , share and comment if you have any questions.

Thank you for such a nice explanation. I will try to implement it and come back.