Generative AI: LLMs: How to do LLM inference on CPU using Llama-2 1.9

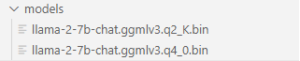

In the last few posts, we talked about how to use the Llama-2 model for different NLP tasks, and in most cases I used a GPU in Kaggle kernels. However, you may not have a GPU available and may need to build apps using only a CPU. In this short …
