In the last post, we covered in detail how to fine-tune a pretrained Llama-2 model using QLoRA. Llama-2 comes in two sets of models: the pretrained base model, which we used in the previous post, and the instruction-fine-tuned Llama-2-chat model, which we will use in this post.

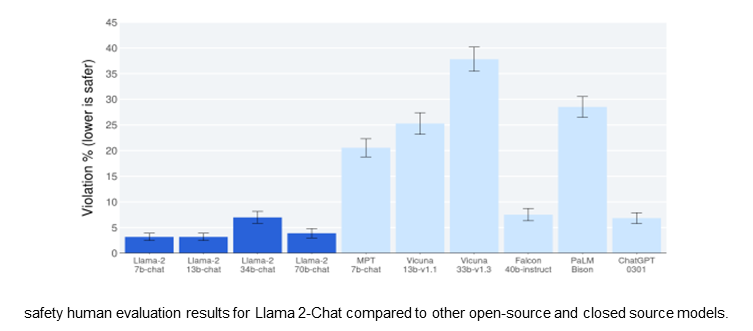

Llama-2 is first pretrained on an extensive corpus with a self-supervised objective. Llama-2-chat is then obtained by aligning it with human preferences through techniques such as Reinforcement Learning from Human Feedback (RLHF), as shown in the image below (source: Llama 2 paper).

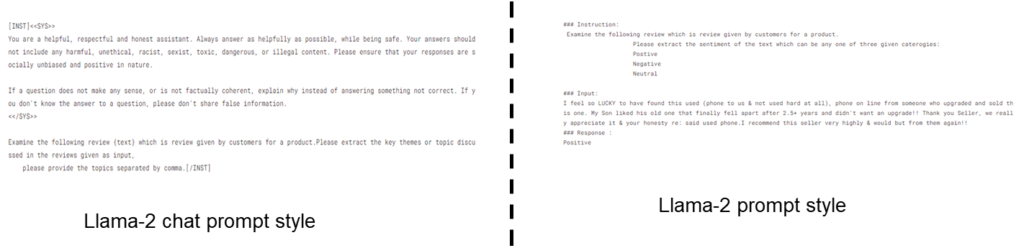

The prompt formats for Llama-2 and Llama-2-chat differ, as shown below.
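To make the difference concrete: the base model simply continues plain text, while the chat model expects each user turn wrapped in `[INST] ... [/INST]`, with an optional system prompt inside `<<SYS>> ... <</SYS>>`. Here is a minimal sketch of a single-turn prompt builder. The helper name `build_chat_prompt` is hypothetical, and the sketch assumes the tokenizer adds the `<s>` beginning-of-sequence token for you.

```python
# Markers used by the Llama-2 chat prompt format.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Wrap a single-turn message in the Llama-2 chat format (hypothetical helper)."""
    body = user_message.strip()
    if system_prompt:
        # The system prompt sits inside the first [INST] block, before the user text.
        body = f"{B_SYS}{system_prompt.strip()}{E_SYS}{body}"
    return f"{B_INST} {body} {E_INST}"


prompt = build_chat_prompt(
    "Summarize this review in one sentence: The phone is great.",
    system_prompt="You are a helpful assistant.",
)
print(prompt)
```

For the base Llama-2 model, you would pass the raw text without any of these markers.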

LangChain:

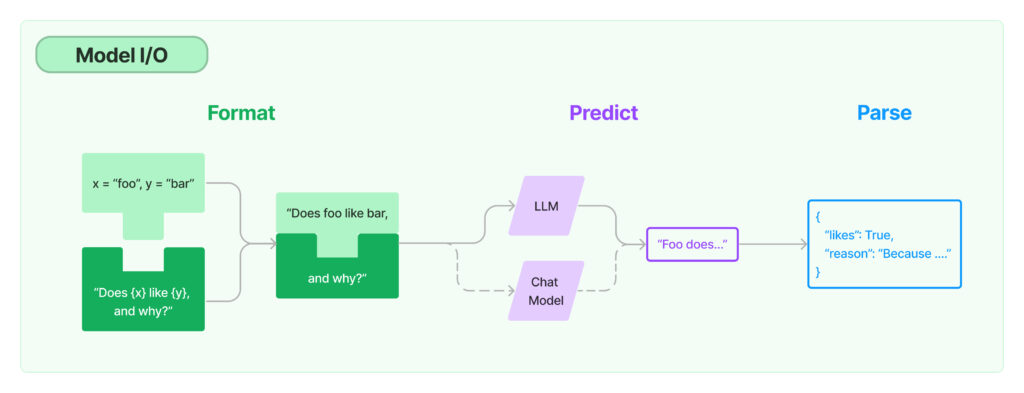

LangChain gives us the building blocks to interface with any language model.

- Prompts: Templatize, dynamically select, and manage model inputs.

- Language models: Make calls to language models through common interfaces.

- Output parsers: Extract information from model outputs.
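To see how these three pieces fit together, here is a dependency-free sketch in plain Python. It does not use LangChain's actual API: `SENTIMENT_PROMPT`, `fake_llm`, `parse_json_reply`, and `run_chain` are all hypothetical names, and `fake_llm` returns a canned reply in place of a real model call.

```python
import json
from string import Template
from typing import Callable

# Prompt: a reusable template with a named input variable ($review).
SENTIMENT_PROMPT = Template(
    'Classify the sentiment of the review below as "positive" or "negative". '
    'Reply with JSON like {"sentiment": "..."}.\n\nReview: $review'
)


def fake_llm(prompt: str) -> str:
    """Language model: a stand-in that returns a canned reply (assumption)."""
    return 'Sure! {"sentiment": "negative"}'


def parse_json_reply(text: str) -> dict:
    """Output parser: decode the span from the first '{' to the last '}'."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    return json.loads(text[start : end + 1])


def run_chain(template: Template, llm: Callable[[str], str], **inputs: str) -> dict:
    """Fill the prompt, call the model, and parse its reply."""
    prompt = template.substitute(**inputs)
    return parse_json_reply(llm(prompt))


result = run_chain(SENTIMENT_PROMPT, fake_llm, review="Battery dies in two hours.")
print(result)
```

Because the model is just a callable behind a common interface, swapping `fake_llm` for a real Llama-2-chat call would leave the prompt and parser untouched, which is the main appeal of this separation.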

In the notebook below, we try out the Llama-2-chat model and explore the benefits of using LangChain as a platform for several LLM tasks, such as:

- Text summarization

- Sentiment analysis

- Topic extraction

- Identifying battery issues from mobile phone reviews
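Each of these tasks can be phrased as a short instruction applied to the same review text. The sketch below shows one way to organize that; the instruction wordings, the `TASK_INSTRUCTIONS` mapping, and the `make_task_prompt` helper are illustrative assumptions, not the exact prompts used in the notebook.

```python
# One instruction per task; the wordings are illustrative assumptions.
TASK_INSTRUCTIONS = {
    "summarization": "Summarize the review below in at most 30 words.",
    "sentiment": "What is the sentiment of the review below? "
                 "Answer with one word: positive or negative.",
    "topics": "List up to five topics discussed in the review below, "
              "separated by commas.",
    "battery_issue": "Does the review below report a battery problem? "
                     "Answer yes or no.",
}


def make_task_prompt(task: str, review: str) -> str:
    """Combine a task instruction with the review, delimited by tags."""
    instruction = TASK_INSTRUCTIONS[task]
    return f"{instruction}\n\n<review>\n{review.strip()}\n</review>"


review = "Great camera, but the battery drains within three hours of light use."
for task in TASK_INSTRUCTIONS:
    print(f"--- {task} ---")
    print(make_task_prompt(task, review))
```

Delimiting the review with tags keeps the instruction and the input clearly separated, which tends to make the model's answers easier to parse.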

Please like and share, and leave a comment if you have any questions or suggestions.