In this new blog post series we will talk about Graph neural network which is according to the ‘State of AI report 2021’ has been stated as one of the hottest field of the AI research. This blog post series will be mostly implementation oriented , however required theory will be covered as much as possible and required resource to understand the concepts in detail will be provided.

Now a days we can see graph data everywhere starting Medical/Biology field (molecules / DNA -proteins interactions can be represented as graph) , Social Networks , Web Graph (World Wide Web is another huge graph data) and many navigation systems like Google Maps / Uber uses graphs networks to solve road networks / navigation problem. Recommendation system also started using graphs as primary object to provide optimal recommendations.

To begin with , Graph is generally a collection of nodes( collection of nodes are also called Vertices) and edges , below is an example given –

V (Vertices) = {0, 1, 2, 3}

E (Edges) = {(0,1), (1,2), (2,3), (0,3)}

G (Graph) = {V, E}

Furthermore , we will talk about different types of graphs , how graphs are generally represented.

Generally graphs are classified as below –

Directed graph:

A graph that is made up of a set of vertices connected by directed edges.

Undirected graph:

A graph where edges does not have any directions is called undirected edges.

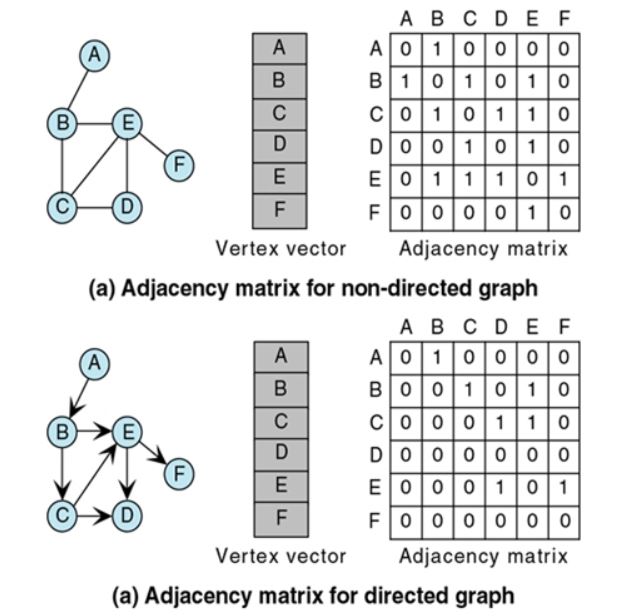

Adjacency matrix (2D matrix) is mostly used to represent a graph where rows and columns are denote vertices. The values of this matrix Aij are defined as:

Example :

Representing the Caffeine molecule from graphs:

Molecules can be represented through of as a group of Atoms held together with chemical bonds. Atoms can be corresponded to the different chemical elements such as carbon(C) , oxygen(0) , nitrogen (N) or hydrogen (H). Bonds between the atoms can also be of different types such as single bond or double bond.

We can represent the molecule with undirected graph with a node label matrix which is an one-hot representation of the atom type of the node along with a edge label matrix where each row is an one-hot representation of associated edge type (single or double bond) as shown below –

GNN can be used to solve multiple machine learning tasks with different kind of prediction problems as shown below –

Why working with Graphs are different than traditional machine learning problems?

1. Graphs exists in non-Euclidian (neither 2D or 3D) space which makes it harder to analyze or visualize Graphs data.

2. Graphs does not have any fixed form; they are dynamic in nature. Two different graphs can have same adjacency matrix or same graph can be represented with different adjacency matrix (by varying the ordering of the nodes). For these reasons while designing the solution for a graphs problem we have to have a strict prior of permutation invariance (which means that ordering of the nodes does not affect the output).

To address the issues mentioned above different types GNN architectures has been developed as shown below –

GCNs (Graph Convolution Networks)

GAT (Graph Attention Network)

In the next post we will go through the very basics of message passing Technique in the GCNs and will try to implement a graph machine learning problem.

Thanks for reading, please comment in case if you have any questions.