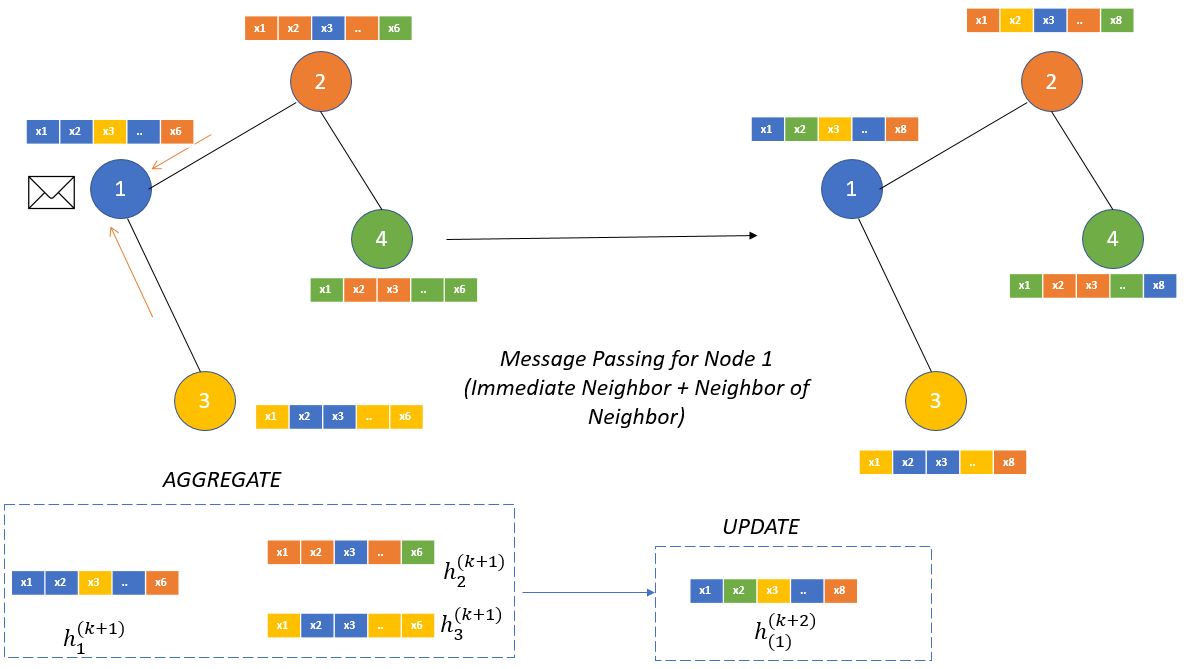

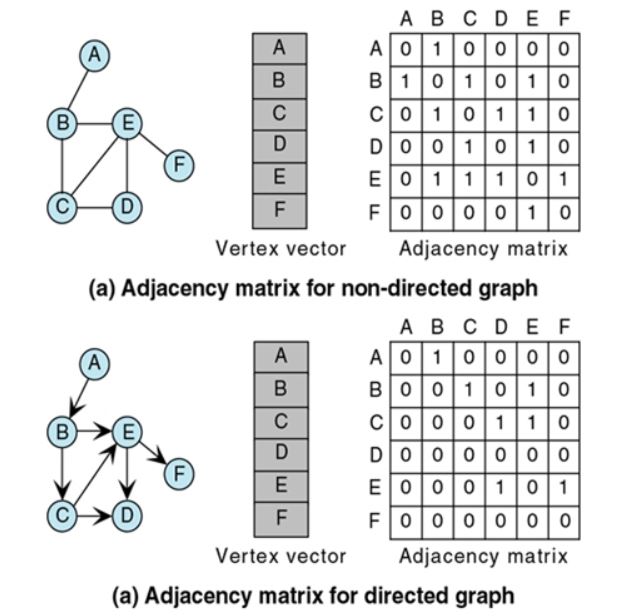

In the previous post we talked about the basics of graphs, the adjacency matrix, and why representing graph data and using it for machine learning tasks differs from working with other forms of data. In this post I will try to explain the basic message passing technique used in Graph Neural Networks. In…

Tag: deep-learning

Graph Neural Network – Getting Started – 1.0

In this new blog post series we will talk about Graph Neural Networks, which the ‘State of AI Report 2021’ names as one of the hottest fields of AI research. This series will be mostly implementation oriented; however, the required theory will be covered as much as…

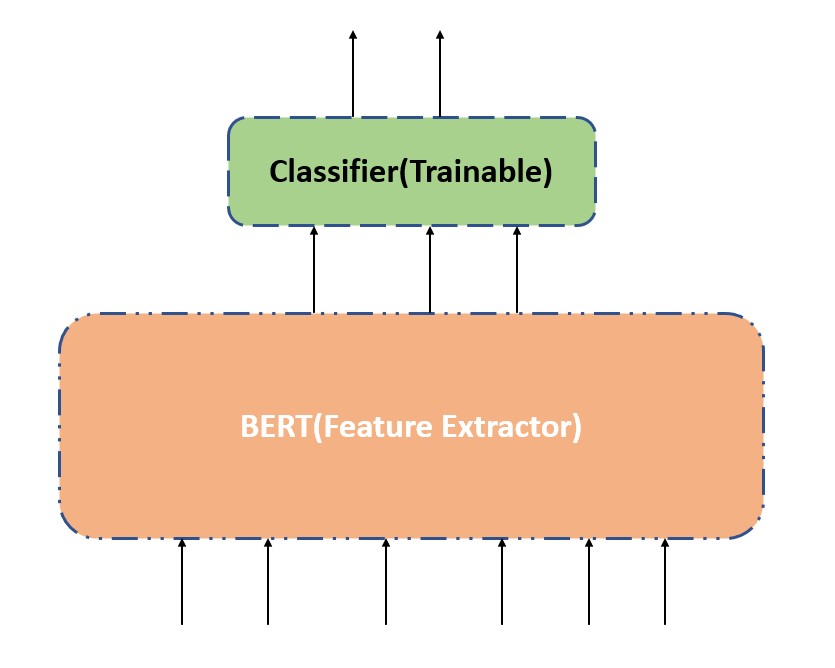

1.2 – Fine Tune a Transformer Model (2/2)

In the last post we saw that we can fine-tune a BERT model using the two techniques below: (1) Update the weights of the pre-trained BERT model along with the classification layer. (2) Update only the weights of the classification layer and not the pre-trained BERT model. This process amounts to using the pre-trained BERT model as…

1.0 – Getting started with Transformers for NLP

In this post we will walk through a hands-on implementation with the Hugging Face transformers library to solve a few simple NLP tasks. We will focus mainly on the hands-on part; in case you are interested in learning more about transformers or the attention mechanism, below are a few resources: Getting started with Google BERT, Neural Machine…

Deep Learning with Pytorch – Text Generation – LSTMs – 3.3

In this Deep Learning with Pytorch series, so far we have seen how to work with tabular data, images, and time series data; in this post we will see how to work with plain text data. Along with generating text with the help of LSTMs, we will also learn two other important…

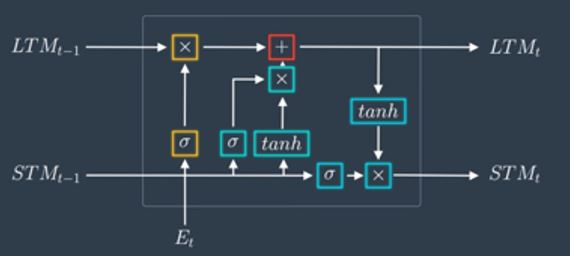

Deep Learning with Pytorch – Sequence Modeling – LSTMs – 3.2

In the previous article on RNNs, we covered the RNN architecture. RNNs perform poorly when long-term dependencies must be maintained (the earlier layers of an RNN suffer from vanishing gradients). This problem has been largely addressed by the LSTM (Long Short Term Memory) architecture…

Deep Learning with Pytorch – Custom Weight Initialization – 1.5

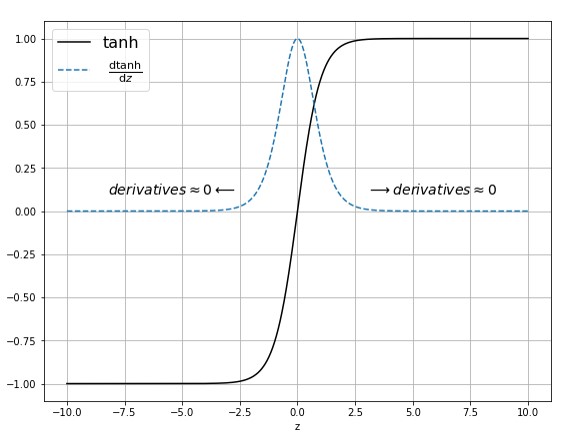

From the images of the Sigmoid and Tanh activation functions below, we can see that for high (or low) values of z (on the x-axis, where z = wx + b), the derivative is zero or close to zero. So for high values of z, we will have vanishing gradients…
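The saturation described in this excerpt can be checked numerically. The snippet below is a minimal sketch, not code from the post itself: the helper names `sigmoid_grad` and `tanh_grad` are hypothetical, and the sample values of z are chosen only for illustration. It uses the standard identities sigmoid'(z) = sigmoid(z)(1 - sigmoid(z)) and tanh'(z) = 1 - tanh(z)^2.

```python
import math


def sigmoid(z):
    """Logistic sigmoid of a scalar z."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid: s(z) * (1 - s(z)). Hypothetical helper name."""
    s = sigmoid(z)
    return s * (1.0 - s)


def tanh_grad(z):
    """Derivative of tanh: 1 - tanh(z)^2. Hypothetical helper name."""
    return 1.0 - math.tanh(z) ** 2


# Illustrative sample points: the derivatives peak at z = 0
# and shrink towards zero as |z| grows.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z={z:+5.1f}  sigmoid'={sigmoid_grad(z):.6f}  tanh'={tanh_grad(z):.6f}")
```

At z = 0 the sigmoid derivative takes its maximum of 0.25 and the tanh derivative its maximum of 1; at the sample points far from zero both are tiny, which is the regime in which gradients vanish during backpropagation.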

Deep Learning with Pytorch – Sequence Modeling – Time Series Prediction – RNNs – 3.1

In the previous post of this series, we learnt about the intuition behind RNNs and tried to understand how we can use RNNs for sequential data like time series. In this post we will see a hands-on implementation of RNNs in Pytorch. In the case of neural networks we feed a vector as predictors…

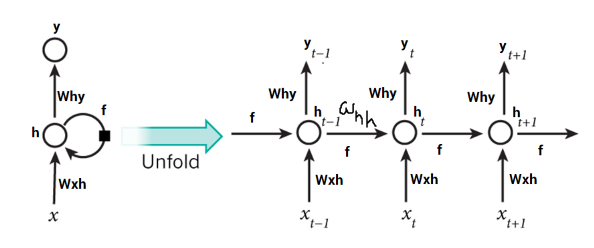

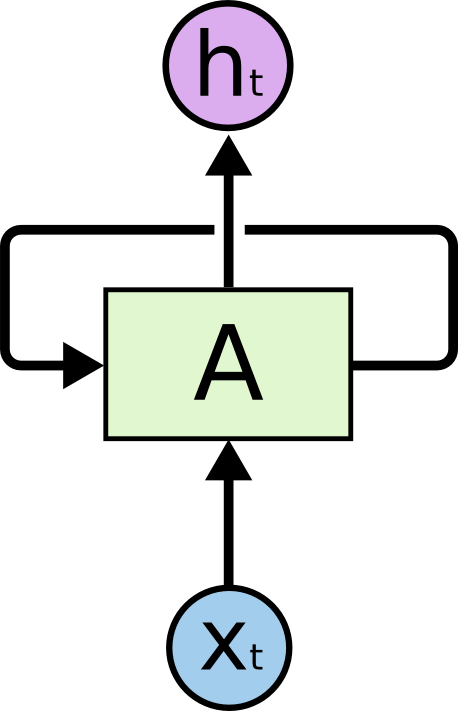

Deep Learning with Pytorch – Sequence Modeling – Getting Started – RNN – 3.0

In the CNN series, we learned about the limitations of MLPs and how they can be solved with CNNs. Here we are getting started with another type of neural network: the RNN (Recurrent Neural Network). Some of the tasks that we can achieve with RNNs are given below: 1. Time Series Prediction (Stock…

Deep Learning with Pytorch – CNN – Transfer Learning – 2.2

Transfer learning is the process of applying knowledge gained from one task to another, newly assigned task. One simple example is carrying over your skill of riding a bicycle to learning to ride a motorbike. Referring to notes from the cs231 course: In practice, very…