In the last post we talked about how to do in-context finetuning using few-shot techniques. In-context finetuning works when we don’t have much data or don’t have access to the full model. This technique has certain limitations; for example, the more examples you add to the prompt, the longer the context becomes, and…
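The context-length limitation can be sketched in a few lines of plain Python. This is an illustrative sketch, not the previous post's code: the sentiment task, the `Review:`/`Sentiment:` prompt format, the example reviews and the helper `build_few_shot_prompt` are all hypothetical, and counting whitespace-separated words is only a rough stand-in for the subword tokens a real model would see.

```python
# Sketch: few-shot prompt construction for in-context learning.
# Task, prompt format and examples are hypothetical, for illustration only.

def build_few_shot_prompt(examples, query):
    """Concatenate labelled examples, then the unlabelled query the model should complete."""
    parts = [f"Review: {text}\nSentiment: {label}" for text, label in examples]
    parts.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(parts)

examples = [
    ("The movie was fantastic.", "positive"),
    ("I want my money back.", "negative"),
    ("Great acting and a clever plot.", "positive"),
    ("Boring from start to finish.", "negative"),
]
query = "The soundtrack was lovely."

# Rough length proxy: whitespace-separated words (a real tokenizer counts subword tokens).
for k in range(len(examples) + 1):
    prompt = build_few_shot_prompt(examples[:k], query)
    print(f"{k} examples -> {len(prompt.split())} words")
```

Every extra example is paid for again on every request, so the prompt grows linearly with the number of shots until it reaches the model's context limit.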

Tag: transformers

1.2 – Fine Tune a Transformer Model (2/2)

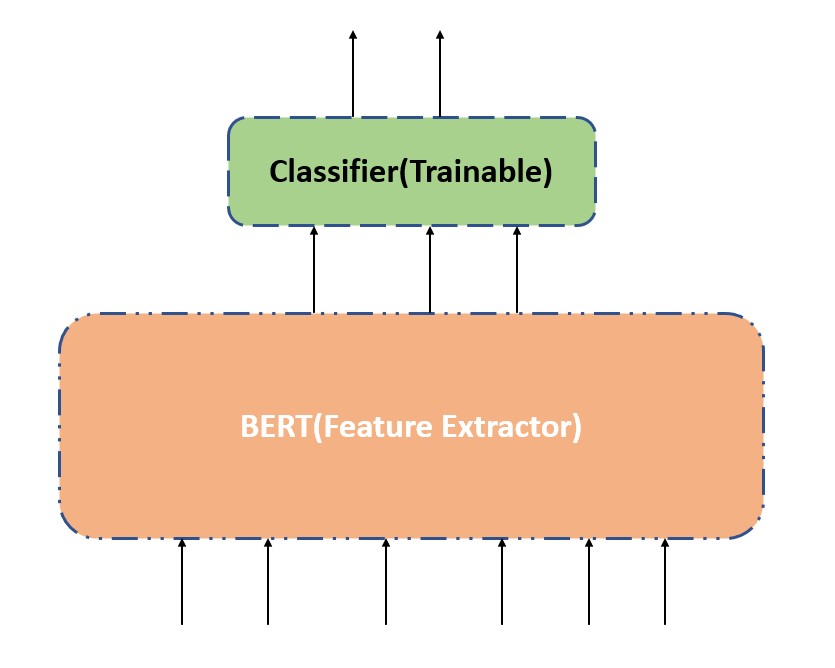

In the last post we talked about how we can fine-tune a BERT model using the two techniques below:

- Update the weights of the pre-trained BERT model along with the classification layer.
- Update only the weights of the classification layer and not the pre-trained BERT model. This amounts to using the pre-trained BERT model as…
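The difference between the two techniques can be sketched with PyTorch and the Hugging Face transformers library, assuming both are installed. This is a minimal sketch, not the post's own code: to keep it runnable without downloading weights it builds a tiny, randomly initialised BERT from a `BertConfig` (the sizes are arbitrary), whereas in practice you would load a checkpoint with `BertForSequenceClassification.from_pretrained(...)`. The helper names and the learning rate are hypothetical.

```python
# Sketch: full fine-tuning vs. training only the classification head.
# Tiny random config (arbitrary sizes) so no pre-trained weights are downloaded.
import torch
from transformers import BertConfig, BertForSequenceClassification

def build_model(freeze_bert: bool) -> BertForSequenceClassification:
    config = BertConfig(
        vocab_size=100, hidden_size=32, num_hidden_layers=2,
        num_attention_heads=2, intermediate_size=64, num_labels=2,
    )
    model = BertForSequenceClassification(config)
    if freeze_bert:
        # Technique 2: no gradients for the BERT encoder (model.bert);
        # only the classification layer (model.classifier) stays trainable.
        for param in model.bert.parameters():
            param.requires_grad = False
    return model

def count_trainable(model: torch.nn.Module) -> int:
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

full = build_model(freeze_bert=False)   # Technique 1: BERT + classification layer
frozen = build_model(freeze_bert=True)  # Technique 2: classification layer only

# Hand the optimizer only the parameters that should be updated (lr is a placeholder).
optimizer = torch.optim.AdamW(
    [p for p in frozen.parameters() if p.requires_grad], lr=1e-3
)
print(count_trainable(full), count_trainable(frozen))
```

With the frozen encoder only the linear head is trained (here 32 × 2 weights plus 2 biases), which is much cheaper per step but cannot adapt BERT's internal representations to the new task.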

1.0 – Getting started with Transformers for NLP

In this post we will go through how to do a hands-on implementation with the Hugging Face transformers library to solve a few simple NLP tasks. We will mainly focus on the hands-on part; in case you are interested in learning more about transformers and the attention mechanism, below are a few resources: Getting started with Google Bert, Neural Machine…